Dataset:

Human-Human Interaction of a Proactive Shopkeeper Interacting with Customers

We have presented a fully autonomous method that enables a robot to reproduce both reactive and proactive socially interactive behavior solely from examples of human-human interaction. Both the behavior content and the execution logic are derived directly from observed data captured by a sensor network.

This page shares the dataset of human-human interactions that we collected. To capture the participants' motion and speech, we used a human position tracking system to record people's positions in the room and a set of handheld smartphones for speech recognition.

Scenario

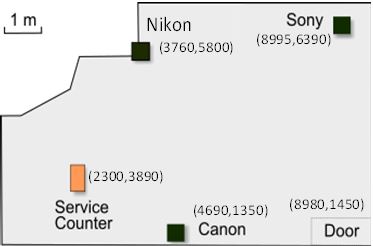

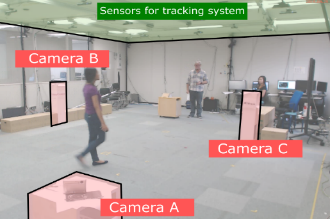

We chose a camera shop scenario as an example of the kind of repeatable interaction in which our data-driven technique is most useful for automatically reproducing robot behavior in human-robot interaction. We set up a simulated camera shop in our laboratory with three camera models on display, each at a different location (Fig. 1), and asked a participant to role-play a proactive shopkeeper. The shopkeeper interacted with participants role-playing customers, walking with them to different cameras in the shop, answering questions about camera features, and proactively introducing new cameras or features when the customers had no specific questions. We recorded the speech and motion data of both the shopkeeper and the customers during these interactions (Fig. 2).

Figure 1. Map of the simulated camera shop. The numbers in brackets indicate the position of each camera.

Figure 2. Environment setup for our study, featuring three camera displays. Sensors on the ceiling were used to track human positions, and smartphones carried by the participants were used to capture speech.

To create variation in the interactions, customer participants were asked to role-play either an advanced or a novice camera user in different trials, and to ask questions appropriate for that role. Some camera features were chosen to be of more interest to novice users (color, weight, etc.) and others to advanced users (high-ISO performance, sensor size, etc.), although the features were not explicitly labeled as such.

Dataset

Each customer participant conducted 24 interactions (12 as an advanced user and 12 as a novice), for a total of 216 interactions. Seventeen interactions were removed because of technical failures of the data capture system or because one participant did not follow instructions. The final dataset consists of 199 interactions (each identified by a trial ID), with an average duration of 3 minutes and 16 seconds per interaction.
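These figures can be cross-checked once the files are loaded: 216 - 17 = 199 trials should remain, and an average of 3 min 16 s corresponds to 196 s per trial. The following is a minimal Python sketch, not part of the released dataset, that recomputes per-trial durations from the TIMESTAMP and TRIAL fields described below. It assumes one row per second per trial, so a trial's duration is its last timestamp minus its first, plus one; the helper names `trial_durations` and `summarize` are hypothetical.

```python
from statistics import mean
from typing import Dict, Iterable, Tuple


def trial_durations(pairs: Iterable[Tuple[int, int]]) -> Dict[int, int]:
    """Map TRIAL -> duration in seconds from (TRIAL, TIMESTAMP) pairs.

    Assumption: one row per second, so duration = last - first timestamp + 1.
    Rows may arrive in any order.
    """
    first: Dict[int, int] = {}
    last: Dict[int, int] = {}
    for trial, ts in pairs:
        first[trial] = min(ts, first.get(trial, ts))
        last[trial] = max(ts, last.get(trial, ts))
    return {t: last[t] - first[t] + 1 for t in first}


def summarize(durations: Dict[int, int]) -> str:
    """Report the trial count and the mean duration as minutes and seconds."""
    minutes, seconds = divmod(round(mean(durations.values())), 60)
    return f"{len(durations)} trials, mean {minutes} min {seconds} s"


# For the full dataset one would expect roughly "199 trials, mean 3 min 16 s".
```

Gaps in the tracking data would make a span-based duration longer than the number of rows in that trial, so comparing the two is a quick way to spot missing seconds.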

The data are provided as CSV files. Each row corresponds to one second of an interaction and contains the following fields:

- TIMESTAMP (Unix time, in seconds)

- TRIAL (trial ID, integer)

- CUSTOMER_TYPE (novice or advanced camera user)

- CUSTOMER_X (position, in mm)

- CUSTOMER_Y (position, in mm)

- SHOPKEEPER_X (position, in mm)

- SHOPKEEPER_Y (position, in mm)

- CUSTOMER_SPEAKING ("SILENCE" or "SPEAKING")

- CUSTOMER_SPEECH (automatic speech recognition (ASR) result)

- ACTUAL_CUSTOMER_SPEECH (ASR result, manually corrected)

- CUSTOMER_SPEECH_DURATION (in ms)

- SHOPKEEPER_SPEAKING ("SILENCE" or "SPEAKING")

- SHOPKEEPER_SPEECH (automatic speech recognition (ASR) result)

- ACTUAL_SHOPKEEPER_SPEECH (ASR result, manually corrected)

- SHOPKEEPER_SPEECH_DURATION (in ms)
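For working with the files programmatically, here is a minimal Python sketch that reads one CSV file with the standard `csv` module and groups its rows by TRIAL. It assumes each file has a header row spelled exactly like the field names above, that speech columns are left empty during silent seconds, and that numeric columns are plain decimal strings. The `Row` class, `load_rows`, `distance_mm`, and the sample values are hypothetical illustrations, not part of the released dataset.

```python
import csv
import io
import math
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, TextIO, Tuple


@dataclass
class Row:
    """One second of one interaction (hypothetical container class)."""
    timestamp: int                       # TIMESTAMP, Unix time in seconds
    trial: int                           # TRIAL
    customer_type: str                   # CUSTOMER_TYPE: "novice" or "advanced"
    customer_xy: Tuple[float, float]     # (CUSTOMER_X, CUSTOMER_Y) in mm
    shopkeeper_xy: Tuple[float, float]   # (SHOPKEEPER_X, SHOPKEEPER_Y) in mm
    customer_speaking: bool              # CUSTOMER_SPEAKING == "SPEAKING"
    customer_speech: str                 # ACTUAL_CUSTOMER_SPEECH
    shopkeeper_speaking: bool            # SHOPKEEPER_SPEAKING == "SPEAKING"
    shopkeeper_speech: str               # ACTUAL_SHOPKEEPER_SPEECH

    def distance_mm(self) -> float:
        """Euclidean customer-shopkeeper distance in mm (NaN if a position is missing)."""
        return math.dist(self.customer_xy, self.shopkeeper_xy)


def _num(cell: str) -> float:
    # Assumption: numeric cells are decimal strings; empty cells become NaN.
    return float(cell) if cell.strip() else float("nan")


def load_rows(f: TextIO) -> Dict[int, List[Row]]:
    """Parse one CSV stream (with header row) and group rows by TRIAL, sorted by time."""
    trials: Dict[int, List[Row]] = defaultdict(list)
    for rec in csv.DictReader(f):
        row = Row(
            timestamp=int(float(rec["TIMESTAMP"])),
            trial=int(rec["TRIAL"]),
            customer_type=rec["CUSTOMER_TYPE"].strip(),
            customer_xy=(_num(rec["CUSTOMER_X"]), _num(rec["CUSTOMER_Y"])),
            shopkeeper_xy=(_num(rec["SHOPKEEPER_X"]), _num(rec["SHOPKEEPER_Y"])),
            customer_speaking=rec["CUSTOMER_SPEAKING"].strip() == "SPEAKING",
            customer_speech=rec["ACTUAL_CUSTOMER_SPEECH"].strip(),
            shopkeeper_speaking=rec["SHOPKEEPER_SPEAKING"].strip() == "SPEAKING",
            shopkeeper_speech=rec["ACTUAL_SHOPKEEPER_SPEECH"].strip(),
        )
        trials[row.trial].append(row)
    for rows in trials.values():
        rows.sort(key=lambda r: r.timestamp)
    return dict(trials)


# Made-up two-row sample in the documented column order (not real data).
SAMPLE = (
    "TIMESTAMP,TRIAL,CUSTOMER_TYPE,CUSTOMER_X,CUSTOMER_Y,SHOPKEEPER_X,SHOPKEEPER_Y,"
    "CUSTOMER_SPEAKING,CUSTOMER_SPEECH,ACTUAL_CUSTOMER_SPEECH,CUSTOMER_SPEECH_DURATION,"
    "SHOPKEEPER_SPEAKING,SHOPKEEPER_SPEECH,ACTUAL_SHOPKEEPER_SPEECH,SHOPKEEPER_SPEECH_DURATION\n"
    "1000001,3,novice,0,0,3000,4000,SILENCE,,,,SPEAKING,may i help you,May I help you?,1500\n"
    "1000000,3,novice,100,0,3000,4000,SILENCE,,,,SILENCE,,,\n"
)

if __name__ == "__main__":
    for trial, rows in load_rows(io.StringIO(SAMPLE)).items():
        for r in rows:
            print(trial, r.timestamp, round(r.distance_mm()), r.shopkeeper_speech)
```

Because positions are given in millimeters, `distance_mm()` also returns millimeters, which makes it straightforward to check, for instance, whether the shopkeeper and customer are standing together at a display. If the dataset is split across several CSV files, `load_rows` can be called on each file and the resulting dictionaries merged.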

Dataset file [4.0 MB]

License

The datasets are free to use for research purposes only.

If you use the datasets in your work, please cite the references below.

References

- Liu, P., Glas, D. F., Kanda, T., & Ishiguro, H. (2017). Learning Proactive Behavior for Interactive Social Robots. Submitted to Autonomous Robots (currently under review).

- Liu, P., Glas, D. F., Kanda, T., & Ishiguro, H. (2016). Learning Interactive Behavior for Service Robots: The Challenge of Mixed-Initiative Interaction. Workshop on Behavior Adaptation, Interaction and Learning for Assistive Robotics (BAILAR), 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN 2016), New York, NY, USA, August 2016.

- Liu, P., Glas, D. F., Kanda, T., & Ishiguro, H. (2016). Data-Driven HRI: Learning Social Behaviors by Example from Human-Human Interaction. IEEE Transactions on Robotics, Vol. 32, No. 4, pp. 988-1008. doi:10.1109/tro.2016.2588880

- Liu, P., Glas, D. F., Kanda, T., Ishiguro, H., & Hagita, N. (2014). How to Train Your Robot: Teaching Service Robots to Reproduce Human Social Behavior. The 23rd IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN 2014), pp. 961-968, Edinburgh, Scotland, 25-29 August 2014. doi:10.1109/roman.2014.6926377


Inquiries and feedback

For any questions concerning the datasets, please contact: phoebe@atr.jp